Online gamers know what it feels like. You’re playing a fast-paced, fast-clicking game such as Fortnite, and you’re about to eliminate an opponent when the game slows down; there’s no response to your frantic clicking and tapping. And by the time the lagging has ended, you’ve been eliminated and your opponent is doing the old L-finger-on-the-forehead dance. The problem is high latency. It’s a nightmare for gamers and it’s an expensive handicap for traders and businesses too.

Slow internet has any number of causes, but the three most common are low bandwidth (how much data a communication link can carry per unit of time); high latency (how long it takes a packet of data to get across the network); and low throughput (how much data is actually delivered per unit of time).

When users today complain about ‘slow internet speeds’, the underlying problem often isn’t bandwidth; it’s latency. SEACOM, Africa’s first submarine broadband cable operator, explained this recently after facing criticism about internet speeds. ‘Since 2016 South African companies have been shifting their storage, security, communications and other business tools from on-premise to the cloud,’ the company stated. ‘Microsoft and Amazon may have arrived on our shores, but their data centres are largely serving a network function, for now, as opposed to hosting cloud services themselves. The point is that the cloud – and the servers that host the cloud’s functionality – are still physically far away in Europe and North America. Unsurprisingly, this long-distance digital migration has been accompanied by more complaints about connectivity speed as content must still be largely accessed from thousands of kilometres away.’

And while Africa is geographically far away from the main data hubs, the truth is that latency isn’t just an African problem. David Trossell, CEO of global data acceleration company Bridgeworks, says that ‘it doesn’t matter where in the world we work with customers, they all have the same problem: latency. In Africa, distances are vast between centres – places such as Johannesburg, Durban and Cape Town. They are no different to our customers in Los Angeles, Washington and New York. Latency is the same the world over’.

Businesses are only now starting to see the impact. ‘At the turn of the century, we all knew why the internet was slow,’ says Trossell. ‘The modems we were using back then were operating in the low-kilobit range. Yes, there was still latency, but that was a small portion of the overall “slowness”. Just having the ability to connect to the rest of the world, to search the web and to find things we never knew existed just masked what we deem now as the chronic performance. Today, with our high-speed fibre broadband, we now see the effects of latency kick in. This occurs when accessing sites across continents, but in reality it is no more than an irritation.’ It may be an irritation for normal internet users and online gamers, but when one scales up to an enterprise level, latency becomes a real issue.

‘It’s a major performance thief, sapping up to 90% of the bandwidth when we start to put distances between sites,’ says Trossell.

For high-speed traders who deal in microseconds, that can make all the difference. Recent calculations from the UK’s Financial Conduct Authority (FCA) found that traders who use high-speed methods to beat the rest of the stock market to deals impose what amounts to a ‘tax’ of GBP42 000 for every GBP1 billion traded. The practice, known as ‘latency arbitrage’, involves exploiting prices obtained at low latency from certain exchanges. That split-second advantage lets these traders capture older, uncompetitive quotes ahead of their rivals.

It happens at lightning speed. According to the FCA, the average race between traders lasts just 79 microseconds (or 79 millionths of a second), with only the quickest gaining any benefit. And while each ‘race’ only yields small wins, when you extrapolate it over days, the amounts rack up quickly. The FCA tracked 2.2 billion of these races over the course of 43 trading days on the London Stock Exchange and found that more than 20% of the total trading volume came from these latency arbitrage races. Extrapolate that over a full year in the UK equity market (as the FCA did), and it adds up to about GBP60 million a year. Extrapolate it globally, and it amounts to an estimated US$5 billion.
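To put the FCA's per-billion figure in more familiar terms, here is a minimal Python sketch that applies it to an arbitrary traded amount and expresses it in basis points. The function and constant names are illustrative, not the FCA's, and the example trade size is hypothetical.

```python
# The FCA figure quoted in the article: GBP42 000 per GBP1 billion traded.
TAX_GBP_PER_BILLION = 42_000
ONE_BILLION = 1_000_000_000


def latency_arbitrage_cost_gbp(traded_gbp):
    """Implied latency-arbitrage 'tax' on a given traded amount, in GBP."""
    return traded_gbp * TAX_GBP_PER_BILLION / ONE_BILLION


def tax_in_basis_points():
    """The same rate expressed in basis points (1 bp = 0.01%)."""
    return TAX_GBP_PER_BILLION / ONE_BILLION * 10_000


# Hypothetical example: a fund trading GBP250 million.
print(latency_arbitrage_cost_gbp(250_000_000))  # 10500.0
print(round(tax_in_basis_points(), 2))          # 0.42
```

On any single trade the rate is tiny, which is why the cost only becomes visible once it is added up across a whole market.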

But that’s not the only challenge when it comes to latency. Investec – which is based in South Africa but has clients in the UK and EU – recently felt the effects of high latency, but couldn’t solve the problem head-on.

To comply with data-protection legislation, Investec has to ensure that its critical system data is never more than a few hours old and that its servers are hosted in the EU. But the geographic distance between South Africa and the UK meant that it was taking the company 14 to 15 hours to move those data sets. And no matter how much bandwidth Investec threw at the problem, it couldn’t get the data sets to move any faster. Bridgeworks was able to reduce transfer times to less than four hours.

Investec’s problem, Trossell explains, came down to the fundamentals of TCP/IP network communication protocols. ‘TCP/IP’s great advantage lies in ensuring every packet gets to the destination,’ he says. ‘Once the sender sends a group of packets to the destination, it waits for an acknowledgement [ACK] from the destination detailing the packets it received and those it didn’t. If some are missing, the sender will retransmit the missing packets. That acknowledgement – ACK – is what makes TCP/IP so useful.’
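The send, acknowledge and retransmit cycle Trossell describes can be sketched in a few lines of Python. This is a deliberately simplified model, not real TCP: there are no timers, sequence-number wrapping or congestion control, and `is_lost` is a hypothetical stand-in for an unreliable network.

```python
def deliver_with_acks(packets, is_lost):
    """Send a group of packets, resending whatever was not acknowledged.

    is_lost(seq, attempt) is a hypothetical callback that returns True
    when packet `seq` is dropped on round trip number `attempt`.
    Note: this loops forever if a packet is lost on every attempt.
    """
    received = {}
    pending = list(range(len(packets)))
    round_trips = 0
    while pending:
        round_trips += 1
        # The sender transmits every packet that is still outstanding.
        for seq in pending:
            if not is_lost(seq, round_trips):
                received[seq] = packets[seq]
        # The ACK tells the sender what arrived; the rest is retransmitted.
        pending = [seq for seq in pending if seq not in received]
    ordered = [received[seq] for seq in range(len(packets))]
    return ordered, round_trips


def drop_third_packet_once(seq, attempt):
    """Example network: loses the third packet on the first round trip only."""
    return seq == 2 and attempt == 1


data, trips = deliver_with_acks(["a", "b", "c", "d"], drop_third_packet_once)
print(data, trips)  # ['a', 'b', 'c', 'd'] 2
```

Every packet arrives, but the single lost packet costs one extra round trip, and each round trip takes as long as the distance between the two ends dictates.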

But that ACK quickly becomes a problem when you start putting distance between sender and destination. ‘Once the sender sends the data, it sits there waiting for the ACK before it can send the next group of packets,’ says Trossell. ‘If that [wait] is 100 ms, then we can only send a bunch of packets 10 times a second. If each group of packets adds up to 2 MB of data and we use a 1 Gbps WAN [wide area network], we can only send 20 MB per second, or 10×2 MB. A 1 Gbps link should have a capacity of 110 MB per second. If we up the speed of the WAN to 10 Gbps, then nothing changes, as we will still only achieve 10×2 MB per second. And this is all down to the speed of light, which is just not fast enough. If that is not bad enough, light only travels at two-thirds of its normal speed in glass.’
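Trossell's arithmetic is easy to check. The Python sketch below assumes his figures are in megabytes (2 MB per group of packets, a 100 ms wait for each ACK) and models the sender as simply idle while it waits. The function name is illustrative, and the link rate used is the raw line rate, slightly above the usable capacity of about 110 MB per second that he quotes.

```python
def window_limited_throughput(window_mb, rtt_s, link_mb_per_s):
    """Achievable MB per second when one window is sent per round trip.

    The sender can never beat the link itself, hence the min().
    """
    return min(window_mb / rtt_s, link_mb_per_s)


WINDOW_MB = 2.0   # data sent before waiting for an ACK (from the quote)
RTT_S = 0.100     # 100 ms wait for the acknowledgement (from the quote)

for link_gbps in (1, 10):
    link_mb_per_s = link_gbps * 1000 / 8  # raw line rate, before overhead
    rate = window_limited_throughput(WINDOW_MB, RTT_S, link_mb_per_s)
    share = rate / link_mb_per_s
    print(f"{link_gbps} Gbps link: {rate:.0f} MB/s ({share:.1%} of the link)")
```

Under these assumptions both links deliver about 20 MB per second. The tenfold upgrade changes only the share of the link that sits idle.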

Bridgeworks, which was called in to assist, had to find another way to improve Investec’s speeds. ‘It is not possible to solve latency, as it is governed by the speed of light,’ says Trossell. ‘You can’t reduce it other than moving the two points closer together. What you must do is work with the latency you have and increase the data flow across the WAN. In Investec’s case, we used a pair of PORTrockITs at either end and routed the traffic we wished to accelerate through these PORTrockITs. The PORTrockIT works by maximising the data throughput over the WAN, mitigating the effects of latency.’
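The article does not describe how PORTrockIT works internally, so the following is not a description of the product. It is a general networking rule of thumb, the bandwidth-delay product, which estimates how much data must be in flight at once to keep a link busy at a given latency. The function name and the link and round-trip values are illustrative.

```python
def bandwidth_delay_product_mb(link_gbps, rtt_ms):
    """Data (in MB) that must be in flight to fill the link for one round trip."""
    link_mb_per_s = link_gbps * 1000 / 8   # raw line rate in MB per second
    return link_mb_per_s * rtt_ms / 1000   # MB "in the pipe" during one RTT


for link_gbps in (1, 10):
    needed = bandwidth_delay_product_mb(link_gbps, rtt_ms=100)
    print(f"{link_gbps} Gbps at 100 ms: about {needed:.1f} MB in flight")
```

At 100 ms, a 1 Gbps link needs roughly 12.5 MB in flight and a 10 Gbps link roughly 125 MB. A sender that pauses after every 2 MB leaves most of that pipe empty, which is why the fix has to come from moving more data per round trip rather than from a shorter round trip.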

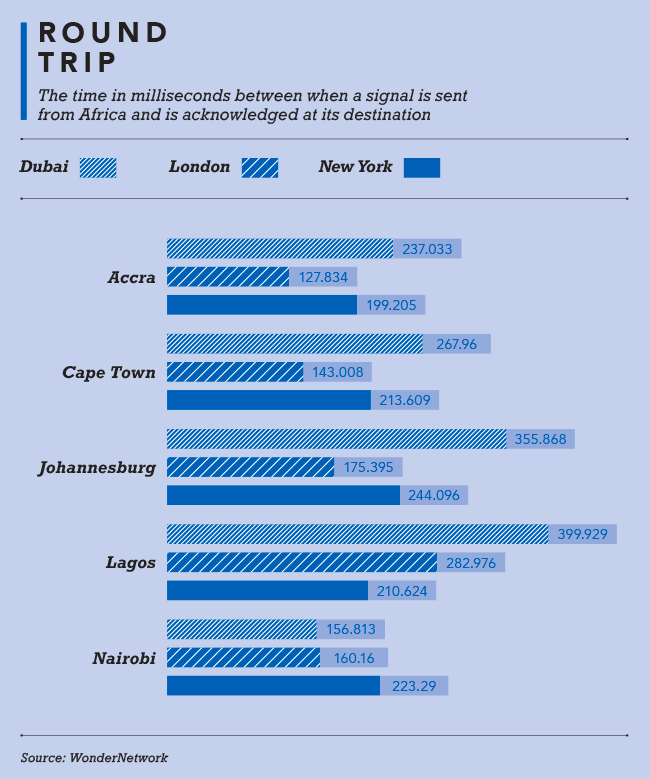

His point about geographic distance is important. No amount of technology will reduce the kilometres between Cape Town and London, Nairobi and Beijing, Luanda and Fortaleza, or Lagos and New York. But more and more undersea cables are linking Africa to the rest of the world – including, for example, the new transatlantic SACS cable, which allows data to make the return trip between Angola and Brazil in just 98 ms, and DARE 1, the new 4 000 km cable running into East Africa from Yemen. And as those connections employ better and smarter technologies, the virtual distances between centres will also shorten. Angola Cables’ new AngoNAP data centre in Fortaleza, Brazil, now links Miami to Fortaleza to Sangano in Angola. The digital distance from one point to the other? A mere 200 ms.
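The physical floor under those figures can be estimated from distance alone. The Python sketch below assumes, as Trossell does, that light in fibre travels at about two-thirds of its speed in a vacuum, and it ignores routing detours and equipment delays, so real round trips will be longer. The 10 000 km route is a round illustrative number, not a measured cable length.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in a vacuum
FIBRE_FRACTION = 2 / 3             # approximate slowdown in glass


def min_round_trip_ms(route_km):
    """Lower bound on round-trip time over a fibre route of the given length."""
    one_way_s = route_km / (SPEED_OF_LIGHT_KM_S * FIBRE_FRACTION)
    return 2 * one_way_s * 1000


# Hypothetical 10 000 km route, roughly intercontinental in scale.
print(f"{min_round_trip_ms(10_000):.1f} ms")  # 100.1 ms
```

A route of that length cannot be crossed and re-crossed in much less than 100 ms, whatever bandwidth is bought, which is the same order of delay Trossell uses in his example.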